Correct.

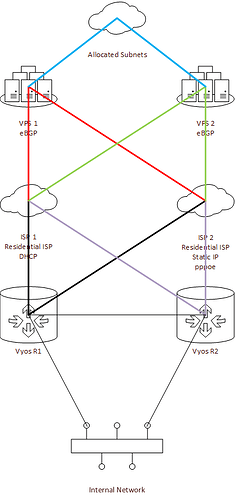

I’d use OSPF to show BGP where to go: carry only the VPN mesh and dummy interface IPs in it, so BGP can reach its peers and next hops. Run BGP to the VPSes for your prefix announcement (obviously); what you import back to prem is up to you. Under most circumstances, I’d avoid pushing outbound traffic over the tunnels into the VPSes if at all possible. If your ISPs will let traffic sourced from your prefixes go out without filtering it, do that.
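Here is a minimal Python sketch of that split. It only illustrates the filtering logic; on VyOS you’d express this with prefix-lists and route-maps, not Python. The address ranges and function names are hypothetical, with 203.0.113.0/24 (a documentation range) standing in for your public prefix:

```python
import ipaddress

# Hypothetical addressing plan (assumptions for illustration only):
#   10.255.0.0/24   - VPN tunnel mesh point-to-point addresses
#   10.255.255.0/24 - dummy interface /32s used as BGP peering addresses
#   203.0.113.0/24  - public prefix announced via the VPSes (documentation range)
OSPF_RANGES = [
    ipaddress.ip_network("10.255.0.0/24"),
    ipaddress.ip_network("10.255.255.0/24"),
]
PUBLIC_PREFIX = ipaddress.ip_network("203.0.113.0/24")


def ospf_should_carry(route: str) -> bool:
    """OSPF only carries infrastructure: mesh links and dummy interface IPs."""
    net = ipaddress.ip_network(route)
    return any(net.subnet_of(r) for r in OSPF_RANGES)


def bgp_should_announce(route: str) -> bool:
    """Towards the VPSes, BGP announces only the exact public prefix."""
    return ipaddress.ip_network(route) == PUBLIC_PREFIX


if __name__ == "__main__":
    for route in ["10.255.0.4/30", "10.255.255.1/32", "203.0.113.0/24", "203.0.113.64/26"]:
        print(f"{route:18} ospf={ospf_should_carry(route)} bgp={bgp_should_announce(route)}")
```

The point of the design is that OSPF never sees the public prefix; it just gives BGP reachability for its peering and next-hop addresses.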

Normally, with an entire /24 or larger for servers, I’d assign the public IPs directly to the servers, avoid DNAT, and firewall where required. If you do want NAT, you can use interface groups and conntrack sync so that NAT state can move around and fail over between the prem routers.
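As a rough illustration of direct allocation, here’s a Python sketch that carves a public block into segments and hands the addresses straight to servers, with no DNAT involved. The /24 (a documentation range), the /26 split, and the convention that the first usable address in each segment is the router are all assumptions:

```python
import ipaddress


def plan_block(block: str, new_prefix: int):
    """Split a public block into segments; return (segment, gateway, server IPs).

    Assumption: the first usable address in each segment is the router/gateway,
    and every remaining usable address is assigned directly to a server.
    """
    plan = []
    for seg in ipaddress.ip_network(block).subnets(new_prefix=new_prefix):
        hosts = list(seg.hosts())
        plan.append((seg, hosts[0], hosts[1:]))
    return plan


if __name__ == "__main__":
    for seg, gateway, servers in plan_block("203.0.113.0/24", 26):
        print(f"{seg}: gateway {gateway}, {len(servers)} server addresses")
```

Servers then see their real public addresses, and filtering happens on the routed path rather than through DNAT rules.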

I can’t help much with the specifics. I’ve done a lot of basic NAT with VyOS, but not since the move to nftables and not with conntrack sync.

That’s the idea, yes. One configuration at the hub end, then one simple config per hub on each spoke. Under the hood, it’s effectively dynamically established IPsec/GRE.

DMVPN simplifies configuration a bit, especially if you have many spokes. It’s not super relevant for your use case, but it also lets spokes establish direct tunnels to each other, bypassing the hub and forming a full mesh. DMVPN (NHRP plus multipoint GRE) originated in Cisco-land and is supported by a few other vendors, including VyOS.

Plain IPsec/GRE is roughly the same as far as your needs go, and identical for performance and security, but it requires manual configuration on both ends of each point-to-point link.
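To put rough numbers on the configuration difference, here’s a Python sketch that counts tunnel configurations. It assumes every site needs to reach every other site, counts one tunnel stanza per link endpoint, and uses hypothetical hub/spoke counts:

```python
def p2p_full_mesh(sites: int) -> tuple[int, int]:
    """Manual IPsec/GRE full mesh: returns (links, tunnel configs).

    Each pair of sites needs its own point-to-point link, configured on both ends.
    """
    links = sites * (sites - 1) // 2
    return links, 2 * links


def dmvpn_configs(hubs: int, spokes: int) -> int:
    """DMVPN: one config per hub, plus one per hub on each spoke.

    Spoke-to-spoke tunnels are set up dynamically via NHRP, so they add no config.
    """
    return hubs + spokes * hubs


if __name__ == "__main__":
    # Hypothetical: 2 VPS hubs plus 2, then 8, prem/branch spokes.
    for spokes in (2, 8):
        sites = 2 + spokes
        links, p2p = p2p_full_mesh(sites)
        print(f"{sites} sites: p2p {links} links / {p2p} configs, "
              f"DMVPN {dmvpn_configs(2, spokes)} configs")
```

With only a couple of prem routers and VPSes the gap is small, which is why DMVPN isn’t a big win for your case; it pulls ahead as the spoke count grows.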

WireGuard I’m not familiar with: it’s not supported by much vendor tin and I’ve never had the need. It’s well regarded and designed to be easy to configure and secure, at least on par with DMVPN or OpenVPN.

IMO, for your usage it’d just be overhead. Do you need layer 2 connectivity between the prem and VPS ends? Might you want to bridge something from a VPS into prem in future? You’ll be shrinking your MTU to get it. You can of course use the L3 VPN mesh as a VXLAN underlay with a little effort.
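For a feel of the MTU cost, here’s a Python sketch of the arithmetic for VXLAN running over a GRE mesh. It assumes an IPv4 underlay with a 1500-byte MTU, no VLAN tags or IP options, and basic GRE (no key or sequence fields). IPsec ESP overhead varies with cipher and mode, so it’s left as an extra term you’d plug in yourself:

```python
# Per-layer overhead in bytes, assuming an IPv4 underlay with no options or VLAN tags.
GRE_OVER_IPV4 = 20 + 4             # outer IPv4 header + basic GRE header
VXLAN_OVER_IPV4 = 20 + 8 + 8 + 14  # outer IPv4 + UDP + VXLAN header + inner Ethernet header


def inner_mtu(underlay_mtu: int, *overheads: int) -> int:
    """MTU left for the innermost IP packet after stacking encapsulations."""
    mtu = underlay_mtu - sum(overheads)
    if mtu <= 0:
        raise ValueError("encapsulation overhead exceeds the underlay MTU")
    return mtu


if __name__ == "__main__":
    print("GRE only:      ", inner_mtu(1500, GRE_OVER_IPV4))
    print("VXLAN over GRE:", inner_mtu(1500, GRE_OVER_IPV4, VXLAN_OVER_IPV4))
    # Add your own ESP overhead as another argument to account for IPsec.
```

Each layer you stack comes straight off what the innermost packets can carry, before IPsec takes its share.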

Honestly, overall you’d be better off getting direct services that can deliver BGP to a prem tail, or even diverse L2 into co-lo, and finding peering partner(s). I’m guessing you’re aware of that already and they’re either not available or too expensive for your usage. You’re taking on a lot of complexity for something that should be fairly straightforward.