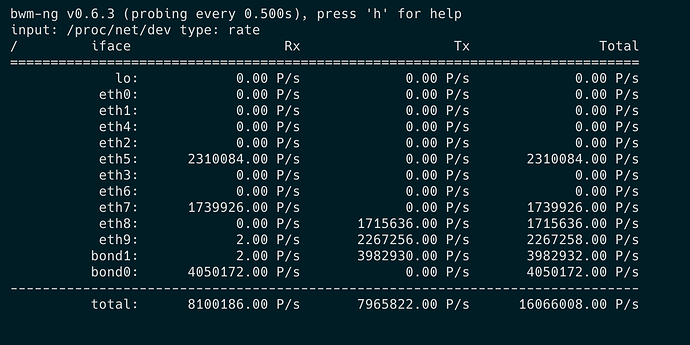

So, without XDP I can reach 4M pkt/s before it starts dropping packets. To reach 4M pkt/s I scaled up to 7 flows, which together send 4.2M pkt/s.

root@test02-dut:/home/vyos# conntrack -L | grep -i offload | wc -l

conntrack v1.4.6 (conntrack-tools): 12 flow entries have been shown.

7
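
For anyone who wants to repeat this check from a script, here is a minimal sketch of the same count in Python. It assumes offloaded entries carry an `[OFFLOAD]` (or `[HW_OFFLOAD]`) tag in the `conntrack -L` listing, which is slightly stricter than `grep -i offload`. The function name `count_offloaded` and the sample lines are made up for illustration (documentation addresses, not my real flows).

```python
import re

# Matches the status tag conntrack prints for flow-offloaded entries.
# Assumption: software offload shows as [OFFLOAD], hardware as [HW_OFFLOAD].
OFFLOAD_TAG = re.compile(r"\[(?:HW_)?OFFLOAD\]", re.IGNORECASE)


def count_offloaded(conntrack_output: str) -> int:
    """Count the lines of a `conntrack -L` listing that carry an offload tag."""
    return sum(
        1 for line in conntrack_output.splitlines() if OFFLOAD_TAG.search(line)
    )


# Hypothetical sample listing: two offloaded entries and one regular entry.
SAMPLE = """\
udp 17 src=192.0.2.1 dst=198.51.100.1 sport=1000 dport=2000 src=198.51.100.1 dst=192.0.2.1 sport=2000 dport=1000 [OFFLOAD] mark=0 use=2
udp 17 src=192.0.2.2 dst=198.51.100.1 sport=1001 dport=2000 src=198.51.100.1 dst=192.0.2.2 sport=2000 dport=1001 [OFFLOAD] mark=0 use=2
tcp 6 431999 ESTABLISHED src=192.0.2.9 dst=198.51.100.9 sport=50000 dport=22 src=198.51.100.9 dst=192.0.2.9 sport=22 dport=50000 [ASSURED] mark=0 use=1
"""

if __name__ == "__main__":
    print(count_offloaded(SAMPLE))
```

On a real box you would feed it the captured stdout of `conntrack -L`. The "flow entries have been shown" summary line is printed on stderr, which is why it still appears in the terminal when the listing is piped into `wc -l`.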