Hi all,

Issue description:

- uacctd process crashes when enabling flow-accounting on sub-interfaces such as eth0.2 and eth0.99.

- uacctd process doesn’t crash if flow-accounting is configured only for base interfaces such as eth0 and eth1.

- uacctd process crashes if both base interfaces and sub-interfaces are configured (in any combination of interfaces)

Error events from the /var/log/messages log:

Dec 15 23:18:31 snow-rtr vyos-configd[743]: Received message: {"type": "init"}

Dec 15 23:18:31 snow-rtr vyos-configd[743]: config session pid is 7841

Dec 15 23:18:31 snow-rtr vyos-configd[743]: Received message: {"type": "node", "data": "/usr/libexec/vyos/conf_mode/flow_accounting_conf.py"}

Dec 15 23:18:31 snow-rtr vyos-configd[743]: Sending response 8

Dec 15 23:18:33 snow-rtr systemd[1]: Starting ulog accounting daemon...

Dec 15 23:18:33 snow-rtr systemd[1]: Started ulog accounting daemon.

Dec 15 23:18:33 snow-rtr systemd[2746]: opt-vyatta-config-tmp-new_config_7841.mount: Succeeded.

Dec 15 23:18:33 snow-rtr systemd[1]: opt-vyatta-config-tmp-new_config_7841.mount: Succeeded.

Dec 15 23:18:34 snow-rtr commit: Successful change to active configuration by user vyos on /dev/pts/0

Dec 15 23:18:34 snow-rtr kernel: [ 5801.984313] uacctd[8220]: segfault at 6 ip 00007fe92aa9c77e sp 00007ffc0a0c5ef8 error 4 in libc-2.31.so[7fe92aa1a000+14b000]

Dec 15 23:18:34 snow-rtr kernel: [ 5801.984358] Code: 4c 8d 0c 16 4c 39 cf 0f 82 63 01 00 00 48 89 d1 f3 a4 c3 80 fa 08 73 12 80 fa 04 73 1e 80 fa 01 77 26 72 05 0f b6 0e 88 0f c3 <48> 8b 4c 16 f8 48 8b 36 48 89 4c 17 f8 48 89 37 c3 8b 4c 16 fc 8b

Dec 15 23:18:34 snow-rtr systemd[1]: uacctd.service: Main process exited, code=killed, status=11/SEGV

Dec 15 23:18:34 snow-rtr systemd[1]: uacctd.service: Failed with result 'signal'.
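
For anyone triaging this, the kernel segfault line above can be decoded a bit further. The following is only a rough sketch: the regular expression and the field names are my own, and the reading of the error code assumes the usual x86 page-fault bits (bit 0 = page present, bit 1 = write, bit 2 = user mode). It computes the offset of the faulting instruction inside libc, which could then be looked up with a tool such as addr2line or gdb against the matching libc build.

```python
import re

# Kernel segfault line copied from the log above.
LINE = ("uacctd[8220]: segfault at 6 ip 00007fe92aa9c77e sp 00007ffc0a0c5ef8 "
        "error 4 in libc-2.31.so[7fe92aa1a000+14b000]")

# Hypothetical pattern written for this one log format; not taken from any tool.
PATTERN = re.compile(
    r"segfault at (?P<addr>[0-9a-f]+) ip (?P<ip>[0-9a-f]+) sp (?P<sp>[0-9a-f]+) "
    r"error (?P<err>\d+) in (?P<lib>[^\[]+)\[(?P<base>[0-9a-f]+)\+(?P<size>[0-9a-f]+)\]"
)


def parse_segfault(line):
    """Extract the fault address, the library offset and the error-code bits."""
    m = PATTERN.search(line)
    if m is None:
        raise ValueError("not a segfault line")
    ip = int(m["ip"], 16)
    base = int(m["base"], 16)
    err = int(m["err"])
    return {
        "fault_addr": int(m["addr"], 16),
        "library": m["lib"],
        # Offset of the faulting instruction relative to the mapping base.
        "offset": ip - base,
        # Assumed x86 page-fault error-code bits.
        "page_present": bool(err & 1),
        "write": bool(err & 2),
        "user_mode": bool(err & 4),
    }


info = parse_segfault(LINE)
print(f"{info['library']}+{info['offset']:#x}")  # libc-2.31.so+0x8277e
```

Under those assumptions, error 4 is a user-mode read of an unmapped page, and a fault address of 6 would be consistent with a read through a near-NULL pointer inside a libc routine called by uacctd. That is a guess from the log line alone, not a confirmed root cause.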

VyOS build:

Version: VyOS 1.4-rolling-202112150318

Release train: sagitta

Built by: autobuild@vyos.net

Built on: Wed 15 Dec 2021 03:18 UTC

Build UUID: 8549e513-cc55-41e0-afdc-b7aba3eb4a23

Build commit ID: 30422e3042965d

Architecture: x86_64

Boot via: installed image

System type: VMware guest

Hardware vendor: VMware, Inc.

Hardware model: VMware Virtual Platform

Hardware S/N: VMware-56 4d 85 75 f1 17 b8 96-30 ef 52 9e fb 38 fe 3a

Hardware UUID: 75854d56-17f1-96b8-30ef-529efb38fe3a

Copyright: VyOS maintainers and contributors

Interface Configuration

set interfaces ethernet eth0 hw-id '00:0c:29:38:fe:3a'

set interfaces ethernet eth0 vif 2 address 'dhcp'

set interfaces ethernet eth0 vif 99 address 'x.x.x.x/x'

set interfaces ethernet eth0 vif 99 description 'WAN-x.x.x.x/x'

set interfaces ethernet eth1 hw-id '00:0c:29:38:fe:44'

set interfaces ethernet eth1 vif 10 address 'x.x.x.x/x'

set interfaces ethernet eth1 vif 10 description 'Mgmt-x.x.x.x/x'

set interfaces ethernet eth1 vif 15 address 'x.x.x.x/x'

set interfaces ethernet eth1 vif 15 description 'Home-x.x.x.x/x'

set interfaces ethernet eth1 vif 20 address 'x.x.x.x/x'

set interfaces ethernet eth1 vif 20 description 'Storage-A-x.x.x.x/x'

set interfaces ethernet eth1 vif 21 address 'x.x.x.x/x'

set interfaces ethernet eth1 vif 21 description 'Storage-B-x.x.x.x/x'

set interfaces ethernet eth2 hw-id '00:0c:29:38:fe:4e'

set interfaces ethernet eth2 vif 98 address 'x.x.x.x/x'

set interfaces ethernet eth2 vif 98 description 'DMZ-x.x.x.x/x'

set interfaces ethernet eth2 vif 100 address 'x.x.x.x/x'

set interfaces ethernet eth2 vif 100 description 'GameVM - x.x.x.x/x'

flow-accounting configuration

set system flow-accounting buffer-size '256'

set system flow-accounting interface 'eth0.2'

set system flow-accounting netflow engine-id '100'

set system flow-accounting netflow max-flows '640000'

set system flow-accounting netflow sampling-rate '1000'

set system flow-accounting netflow server x.x.x.x port '2055'

set system flow-accounting netflow source-ip 'x.x.x.x'

set system flow-accounting netflow timeout expiry-interval '30'

set system flow-accounting netflow timeout flow-generic '3600'

set system flow-accounting netflow timeout icmp '300'

set system flow-accounting netflow timeout max-active-life '604800'

set system flow-accounting netflow timeout tcp-fin '300'

set system flow-accounting netflow timeout tcp-generic '3600'

set system flow-accounting netflow timeout tcp-rst '120'

set system flow-accounting netflow timeout udp '300'

set system flow-accounting netflow version '5'

/boot/rw/etc/pmacct/uacctd.conf

# Genereated from VyOS configuration <<<<<<<<<<<<<<< btw, there is a typo here: "Genereated" should be "Generated"

daemonize: true

promisc: false

pidfile: /var/run/uacctd.pid

uacctd_group: 2

uacctd_nl_size: 2097152

snaplen: 128

aggregate: in_iface,src_mac,dst_mac,vlan,src_host,dst_host,src_port,dst_port,proto,tos,flows

plugin_pipe_size: 268435456

plugin_buffer_size: 268435

imt_path: /tmp/uacctd.pipe

imt_mem_pools_number: 169

plugins: nfprobe[nf_x.x.x.x],memory

nfprobe_receiver[nf_x.x.x.x]: x.x.x.x:2055

nfprobe_version[nf_x.x.x.x]: 5

nfprobe_engine[nf_x.x.x.x]: 100:0

nfprobe_maxflows[nf_x.x.x.x]: 640000

sampling_rate[nf_x.x.x.x]: 1000

nfprobe_source_ip[nf_x.x.x.x]: 10.0.10.254

nfprobe_timeouts[nf_x.x.x.x]: expint=30:general=3600:icmp=300:maxlife=604800:tcp.fin=300:tcp=3600:tcp.rst=120:udp=3000
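
As a sanity check on the generated file, here is a small sketch that compares the timeouts set on the CLI with the nfprobe_timeouts line above. The mapping between CLI option names and pmacct keys is my own assumption, inferred by matching names and values; it is not taken from the VyOS template.

```python
# Values from the "set system flow-accounting netflow timeout ..." lines above.
configured = {
    "expiry-interval": 30,
    "flow-generic": 3600,
    "icmp": 300,
    "max-active-life": 604800,
    "tcp-fin": 300,
    "tcp-generic": 3600,
    "tcp-rst": 120,
    "udp": 300,
}

# nfprobe_timeouts value copied from the generated uacctd.conf above.
generated_line = ("expint=30:general=3600:icmp=300:maxlife=604800:"
                  "tcp.fin=300:tcp=3600:tcp.rst=120:udp=3000")

# ASSUMPTION: CLI option -> pmacct key, inferred from matching names and values.
cli_to_pmacct = {
    "expiry-interval": "expint",
    "flow-generic": "general",
    "icmp": "icmp",
    "max-active-life": "maxlife",
    "tcp-fin": "tcp.fin",
    "tcp-generic": "tcp",
    "tcp-rst": "tcp.rst",
    "udp": "udp",
}


def parse_timeouts(value):
    """Split 'key=value:key=value' into a dict of integer timeouts."""
    return {k: int(v) for k, v in (item.split("=") for item in value.split(":"))}


generated = parse_timeouts(generated_line)

# Collect every option whose generated value differs from the configured one.
mismatches = {
    cli: (configured[cli], generated[key])
    for cli, key in cli_to_pmacct.items()
    if configured[cli] != generated[key]
}
print(mismatches)  # {'udp': (300, 3000)}
```

The only difference is udp: 300 on the CLI versus 3000 in the generated file as pasted. That may be a slip made while copying the output into this post or something in the config generation, and it may well be unrelated to the segfault.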

‘Hardware’ Config

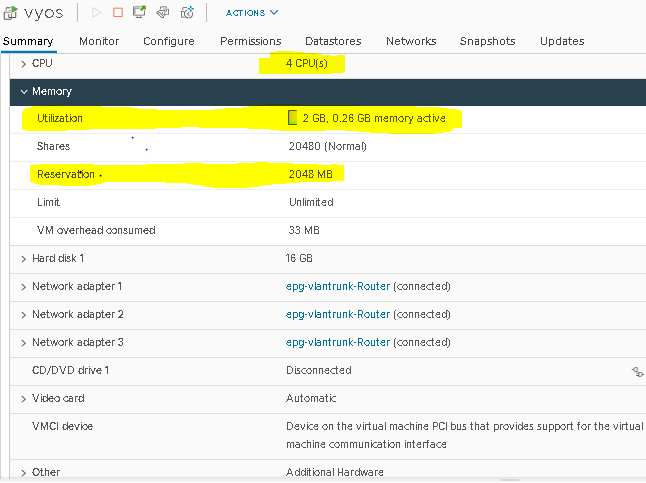

VMware VM, 4 vCPUs, 2 GB RAM, 16 GB+ SSD, vmxnet3 adapters.

vNIC info

13:00.0 Ethernet controller: VMware VMXNET3 Ethernet Controller (rev 01)

DeviceName: Ethernet1

Subsystem: VMware VMXNET3 Ethernet Controller

Physical Slot: 224

Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0, Cache Line Size: 32 bytes

Interrupt: pin A routed to IRQ 16

Region 0: Memory at fd2fc000 (32-bit, non-prefetchable) [size=4K]

Region 1: Memory at fd2fd000 (32-bit, non-prefetchable) [size=4K]

Region 2: Memory at fd2fe000 (32-bit, non-prefetchable) [size=8K]

Region 3: I/O ports at 6000 [size=16]

Expansion ROM at fd200000 [virtual] [disabled] [size=64K]

Capabilities: <access denied>

Kernel driver in use: vmxnet3

Kernel modules: vmxnet3

Storage space

Filesystem Size Used Avail Use% Mounted on

overlay 16G 1.3G 14G 9% /

Memory:

MemTotal: 2041776 kB

MemFree: 988476 kB

MemAvailable: 1102816 kB

Buffers: 13812 kB

Cached: 321508 kB

SwapCached: 0 kB

Active: 120112 kB

Inactive: 378636 kB

Active(anon): 2160 kB

Inactive(anon): 168132 kB

Active(file): 117952 kB

Inactive(file): 210504 kB

Unevictable: 10700 kB

Mlocked: 10700 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 468 kB

Writeback: 0 kB

AnonPages: 173432 kB

Mapped: 74024 kB

Shmem: 2868 kB

KReclaimable: 82924 kB

Slab: 152656 kB

SReclaimable: 82924 kB

SUnreclaim: 69732 kB

KernelStack: 4064 kB

PageTables: 3008 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 1020888 kB

Committed_AS: 530000 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 13048 kB

VmallocChunk: 0 kB

Percpu: 4544 kB

HardwareCorrupted: 0 kB

AnonHugePages: 53248 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

FileHugePages: 0 kB

FilePmdMapped: 0 kB

DirectMap4k: 100224 kB

DirectMap2M: 1996800 kB

Troubleshooting:

- Tried different vifs on different interfaces, with the same result.

- Tried changing all the values (buffer sizes, sampling rates, disable-imt) - nothing helped.

- Rebooted the router, reinstalled fresh, tried manually restarting the services (if this were a physical server, I’d have probably thrown holy water at it, too).

- Cleared config, started fresh - no change.

- Checked the forums for similar errors - found a bug with VRFs, but that particular individual was able to start netflow for sub-interfaces that were not associated with a VRF (and I don’t have VRFs set). I am considering finding out which version he was running and rolling back to it - but then again, I’d prefer to help fix a bug, if this is one, and run the latest build if possible.

- Yes, I updated to the latest and greatest via add system image VyOS Community - but no cigar

I hope this info helps a bit - and thank you for helping out, it is much appreciated.

Kind regards,

Mate