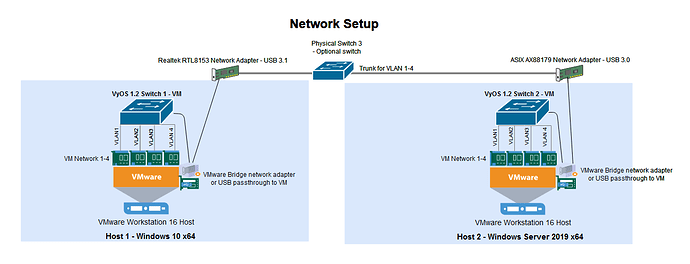

I have set up two VyOS VMs to operate as network switches with multiple VLANs. The VMs run on Windows 10 and Windows Server 2019 VMware Workstation hosts. The Ethernet link operating as the VLAN trunk on both switches is 1 Gb/s and uses USB network adapters. Throughput is roughly 80% of line rate on one host and 50% on the other when one end of the link is a direct physical network adapter. I’ve tried some of the recommended performance tuning, but the speed only ever decreases, never increases. I assume this is the fastest the connection can operate given the hardware setup. When both VyOS VMs are on either end of the link, throughput drops to around 20% of 1 Gb/s. It appears there could be a bug preventing the VMs from communicating with each other at a reasonable speed. I have also tried the 1.3 to 1.4 rolling images, which resulted in even poorer performance. Please assist with identifying any possible bugs.

Hello @0nemax, it would be helpful if you could draw the topology.

I think your NICs don’t support SMP affinity, and this might be critical for performance.

Hey @Dmitry, here is the network diagram.

I did try changing SMP affinity for the trunk and VLAN interfaces using the sample commands below. I could see in htop that the affinity had changed, as the selected CPUs were in use for each interface, but there was no change in performance.

set interfaces ethernet eth0 smp_affinity 1

set interfaces ethernet eth0 smp_affinity 3,5

Additionally, I tested some of the following interface offload and queue options. There was either no change or, in some instances, reduced performance.

Temporary change:

ethtool -K eth0 sg on tso on

ethtool -K eth9 rx on tx on sg on tso off gso off gro off lro off ntuple on rxhash on

ethtool -G eth9 tx 4096 rx 4096

Persistent change:

echo "options ixgbe LRO=0,0 MQ=1,1 RSS=6,6 VMDQ=0,0 vxlan_rx=0,0" | sudo tee -a /etc/modprobe.d/ixgbe.conf

Temporary change:

sudo rmmod vmxnet3

sudo modprobe vmxnet3 disable_lro=1

Persistent change:

echo 'options vmxnet3 disable_lro=1' | sudo tee -a /etc/modprobe.d/vmxnet3.conf

Hello @0nemax, thanks for the diagram.

Why did you change ixgbe? I don’t think you have hardware for this module.

First, try to figure out the capacity between the VyOS routers with both NICs set to passthrough.

Also, please show the output of the following commands for the HW interfaces:

sudo ethtool -l eth0

sudo ethtool -l eth9

The ixgbe (VMware E1000) and vmxnet3 changes were troubleshooting steps associated with the VMware network adapter type. I tried switching the VMware adapter type between e1000, e1000e and vmxnet3 during troubleshooting. The changes follow the information in the VMware adapter troubleshooting article [VMware Knowledge Base].

When both are connected as per the diagram, the maximum throughput is around 160-170 Mb/s.

The USB NICs are in passthrough mode. To make it clear, the physical interfaces are always eth9 on both VyOS systems. The output is as follows.

vyos@SW1:~$ sudo ethtool -l eth9

Channel parameters for eth9:

Cannot get device channel parameters

: Operation not supported

vyos@SW2:~$ sudo ethtool -l eth9

Channel parameters for eth9:

Cannot get device channel parameters

: Operation not supported

@0nemax e1000/e1000e use a different module; it is not ixgbe/igb/i40e etc.

Your HW devices don’t have physical channel parameters, so RSS will not work. Try using soft interrupts (RPS) with the following commands and run the bandwidth test again.
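To see which kernel module an interface is actually bound to before adding module options, the driver symlink in sysfs can be read. The following is only a minimal sketch: the function name `nic_driver` and the `SYSFS_ROOT` override are hypothetical helpers introduced for illustration, and it assumes the interface is backed by a real device that exposes a `device/driver` link.

```shell
#!/bin/sh
# Print the kernel driver bound to a network interface.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) so the
# function can be pointed at a test directory.
nic_driver() {
    link="${SYSFS_ROOT:-/sys}/class/net/$1/device/driver"
    if [ -L "$link" ]; then
        # The symlink target ends in the driver name.
        basename "$(readlink "$link")"
    else
        # Virtual interfaces (VLANs, bridges) have no device/driver link.
        echo "unknown"
        return 1
    fi
}
```

For example, `nic_driver eth9` should print the module handling the USB NIC; `sudo ethtool -i eth9` reports the same information on its `driver:` line. Module options only take effect if they are written for the module shown there.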

configure

set interfaces ethernet eth9 offload rps

commit

The main issue is with your USB NICs; try replacing them with an Intel 82576 or Intel i350.

VyOS 1.2 does not support rps offload. I tried enabling rps offload in VyOS 1.3-1.4 with no improvements.

I have to use USB NICs as a lot of recently released laptops don’t have PCIe NICs.

Yes, this CLI feature was added in the 1.3/1.4 rolling releases; for 1.2.x you need to set it from the bash shell:

sudo su -l

echo "f" > /sys/class/net/eth0/queues/rx-0/rps_cpus

echo "f" > /sys/class/net/eth9/queues/rx-0/rps_cpus
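The value written to `rps_cpus` is a hexadecimal CPU bitmask, so `f` (binary 1111) allows CPUs 0 to 3 to process received packets for that queue. A small sketch for building such a mask from a list of CPU indexes is shown below; the function name `cpu_mask` is a hypothetical helper, and it assumes fewer than 32 CPUs so that a single hex word is enough.

```shell
#!/bin/sh
# Build a hex CPU bitmask (as used by rps_cpus) from CPU indexes.
# Assumes CPU indexes below 32, so one hex word is sufficient.
cpu_mask() {
    mask=0
    for cpu in "$@"; do
        # Set the bit that corresponds to this CPU index.
        mask=$(( mask | (1 << cpu) ))
    done
    printf '%x\n' "$mask"
}
```

With this, `cpu_mask 0 1 2 3` prints `f`, and `cpu_mask 1 3` prints `a`, which would restrict RPS to CPUs 1 and 3.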

I just had a chance to resume troubleshooting. Using the commands to adjust RPS resulted in more cores being utilized, but throughput declined.

The USB NICs can operate at 900 Mb/s or more when connected to Windows VMs via USB passthrough. I assume there is an underlying driver issue that is affecting the VyOS setup. I’ll continue troubleshooting. Please advise of any further troubleshooting steps that could help identify any issues.
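One way to confirm how receive processing is spread across cores, beyond watching htop, is to compare the per-CPU `NET_RX` counters in `/proc/softirqs` before and after a test run. This is a rough sketch only; the function name `net_rx_per_cpu` is a hypothetical helper, and the optional file argument exists just so it can be run against a saved copy.

```shell
#!/bin/sh
# Print the NET_RX softirq counter for each CPU, one per line.
# $1: optional path to a softirqs-format file (default /proc/softirqs).
net_rx_per_cpu() {
    awk '$1 == "NET_RX:" {
        # Field 1 is the label; fields 2..NF are CPU0, CPU1, ...
        for (i = 2; i <= NF; i++) printf "CPU%d %s\n", i - 2, $i
    }' "${1:-/proc/softirqs}"
}
```

Running `net_rx_per_cpu` before and after a bandwidth test and comparing the two outputs shows which CPUs handled the receive work during the run.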

Try a clean Debian Buster install.

Let us know if you find something else.

OK, I will post my findings once I complete the testing.