Thanks for checking out this thread. I have been working on a huge VyOS deployment alone for the past several months, and I feel like I need feedback from others in terms of design guidance and making sure I’m not setting any traps for the future.

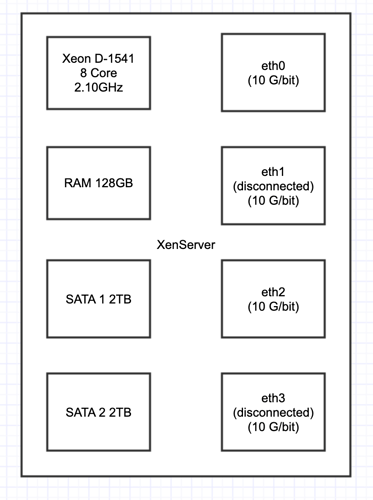

I have 4 of these physical boxes of the following configuration:

Each box is in a different rack in a different datacenter.

Each box has Layer 3 connectivity to the Internet and to the other 3 boxes over eth0. In other words, eth0 does not support VLANs, and the physical servers have to communicate via routers if they want to talk to each other over eth0. Also, those routers on eth0 have an MTU of 1500, so eth0 must be set to MTU 1500 on the physical boxes or else they cannot communicate over eth0.

eth2, on the other hand, has Layer 2 connectivity between the servers. In other words, VLANs are supported, the physical boxes can communicate directly without any routers in the way, and they can use a 9000 MTU nicely!
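Since a wrong MTU on either interface breaks connectivity in ways that are hard to spot, here is a minimal sketch of a check that could be run on each physical box. It assumes a Linux host that exposes MTUs under `/sys/class/net`; the function names and the `EXPECTED_MTU` table are made up for illustration, and the values simply mirror the 1500/9000 split described above.

```python
from pathlib import Path

# Expected MTUs taken from the description above:
# eth0 is routed (1500), eth2 is the layer 2 segment (9000).
EXPECTED_MTU = {"eth0": 1500, "eth2": 9000}


def read_mtu(iface, sys_net="/sys/class/net"):
    """Return the MTU that Linux reports for an interface via sysfs."""
    return int((Path(sys_net) / iface / "mtu").read_text().strip())


def mtu_mismatches(expected=EXPECTED_MTU, sys_net="/sys/class/net"):
    """Return {iface: (expected, actual)} for every interface that differs.

    actual is None when the interface is missing or its MTU is unreadable.
    """
    problems = {}
    for iface, want in expected.items():
        try:
            have = read_mtu(iface, sys_net)
        except (OSError, ValueError):
            have = None
        if have != want:
            problems[iface] = (want, have)
    return problems
```

Calling `mtu_mismatches()` on a correctly configured box should return an empty dict; anything else lists the interfaces to look at.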

Another difference between eth0 and eth2 is that on eth2 I can use any random autogenerated MAC address I want, with any public or private IP I want. I can migrate that VM to any other physical box and the traffic follows virtually uninterrupted. On eth0, on the other hand, every IP is bound to a specific MAC address provided by the ISP and to a specific physical host, so if a VM is migrated, those IPs will not work without a request to the ISP to move the subnet, which is a lengthy process. In other words, eth0 should be used for management and Internet access only, and eth2 should be used for storage and all VM traffic.

With this much RAM and CPU power I can make as many VyOS VMs as I need to support the platform… I’m currently running several, including VyOS routers for Core, VPN, Proxy, and Access.

I decided to go with CentOS boxes to handle DNS and DHCP services, but VyOS is doing everything else right now including all routing, firewall, VPN, NAT, load-balancing, VRRP, OSPF, DHCP-relay and DNS Forwarding Cache.

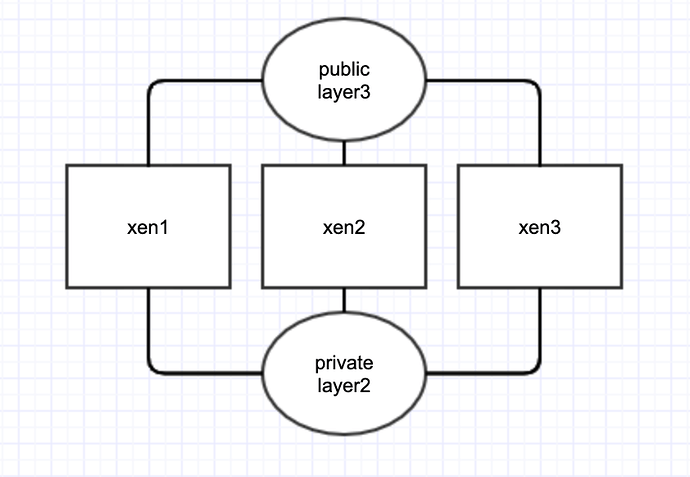

I am not sure that the current routing topology that I have is ideal, so I guess my goal should be to settle on a viable topology.

I have some limitations to work with besides the ones listed above… For instance: I do not need the VyOS VMs to be able to live-migrate, but I do need my other VMs to be able to live-migrate, and I have an NFS shared storage server to store those VMs. I plan on storing the VyOS VMs on local storage (so that at least the network stays up if the NFS goes down).

Each VM is limited to 7 virtual NICs, and the VyOS VMs do not do any VLAN tagging. All the VLAN tagging is done by the hypervisor, so each VLAN a router serves costs it one virtual NIC. I may need to support hundreds of VLANs in the near future, so having some “big” routers with tons of VLAN interfaces is out of the question. I will need a core/distro/access model (I think).
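To put rough numbers on that constraint, here is a back-of-the-envelope sketch. It assumes every VLAN needs its own untagged virtual NIC on an access router and that each access router gives up one NIC for its uplink toward the distribution/core layer. The one-uplink figure and the 300-VLAN example are illustrative assumptions, not numbers from the actual deployment.

```python
def access_routers_needed(vlans, nics_per_vm=7, uplink_nics=1):
    """Access routers needed when each VLAN takes one untagged vNIC.

    uplink_nics is an assumption: NICs reserved per router for the
    uplink toward the distribution/core layer.
    """
    usable = nics_per_vm - uplink_nics
    if usable <= 0:
        raise ValueError("no NICs left for VLAN-facing interfaces")
    # Ceiling division without floating point.
    return -(-vlans // usable)


# Illustrative only: 300 VLANs, 7 NICs per VM, 1 uplink per router.
print(access_routers_needed(300))  # 50
```

With 6 usable NICs per router, the access layer grows by one VyOS VM for every 6 VLANs, which is the main reason a flat design with a few large routers does not fit here.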

I “could” use SR-IOV here and make things run really slick by doing the VLAN tagging in the VyOS VMs, but I ran into some complications. Although I don’t need to live-migrate VyOS, a VM with SR-IOV NICs cannot live-migrate, and if VyOS is on SR-IOV and the regular VMs are not, then unfortunately they cannot communicate with each other.

So I guess the first question I pose to the community is: if you were building this yourself, and you had these 4 physical boxes that you could do whatever you want with, how would you set up your core routing?