@tjh

20240705F@≈AFTERNOON

No problem. If I’m overly verbose, it’s because I know I lack the knowledge to explain or identify a number of things, so whatever I failed to mention (because I may have thought it wasn’t important) can be inferred from context. I’m not intentionally trying to waste anybody’s time. I’ll try to yap less, though that’s kinda hard when details are key. For what it’s worth, these posts take a long time and lots of edits, and a good 80% of them never get sent at all.

Back to the problem: since last time, I had already moved on and deleted the virtual machine too. {answer#4…✓. 9+}

Fortunately, I’ve made a habit of saving the output of show configuration commands, especially when I’m about to jump ship. It shouldn’t take long to recreate the VM and slot it right back where it was, and it would be even faster if I’d turned on vCenter to use VM templates, but I’m taking another direction this time (it’s not nonsense, I promise). VyOS sits at the only point on the network with no redundancy, so it’s easier to pinpoint when something in that spot is the issue. {a#5…✓.}

I’m still not done, but I think I’ve collected enough info to start posting and keep posting during the day.

{speaking of updates…}

TLDR

I found the problem. I’m still leaving everything else in for context; it was meant as a series of quick updates until I forgot to send several in a row, so it turned into a sort of log. Just scroll way down.

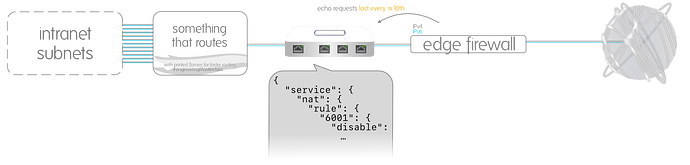

Network’s Own Burden on the Firewall

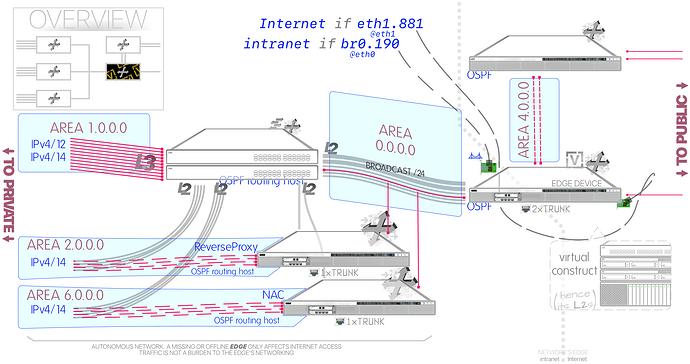

VyOS connects the public and private networks; it’s the edge. It isn’t needed for anything on the intranet unless it involves the Internet. The network is otherwise autonomous: DHCP, DNS, routing, NTP, reverse proxies, internal NAT, all of it lives there.

The interfaces are 10 Gbit/s adapters, of which at most a tenth can ever be used, because that’s what my ISP offers (a single 1 Gbit/s uplink) and the router has no other bandwidth-intensive task. I know bandwidth isn’t the only metric to worry about, but at a tenth of capacity, even if the card were a black-market counterfeit like a fake iPhone, I’m confident the adapter must have resources to spare, MBUFs or whatever those are.

These aren’t fake iPhones though, they are server-grade cards I got for these tests. They must be or vSphere wouldn’t recognize them.
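To put “a tenth” in numbers, here is a back-of-the-envelope sketch. It assumes standard Ethernet framing (8 bytes of preamble/SFD, a 14-byte untagged header, a 4-byte FCS and a 12-byte inter-frame gap) and computes link utilization plus the packet rate the adapter would see at 1 Gbit/s with full-size and minimum-size frames, next to what a 10 Gbit/s port has to sustain at its own line rate:

```python
# Back-of-the-envelope line-rate math, assuming standard Ethernet framing.
PREAMBLE_SFD = 8   # bytes
ETH_HEADER = 14    # dst MAC + src MAC + EtherType (no VLAN tag)
FCS = 4
IFG = 12           # inter-frame gap, expressed in byte times
MIN_FRAME = 64     # minimum Ethernet frame: header + payload + FCS

def wire_bytes_for_mtu(mtu: int) -> int:
    """Bytes a full-size frame occupies on the wire for a given L3 MTU."""
    return PREAMBLE_SFD + ETH_HEADER + mtu + FCS + IFG

def packets_per_second(link_bps: float, bytes_on_wire: int) -> float:
    return link_bps / (bytes_on_wire * 8)

NIC_BPS = 10e9     # 10 Gbit/s adapter
UPLINK_BPS = 1e9   # 1 Gbit/s ISP uplink

utilization = UPLINK_BPS / NIC_BPS
full_size = wire_bytes_for_mtu(1500)        # 1538 bytes on the wire
min_size = PREAMBLE_SFD + MIN_FRAME + IFG   # 84 bytes on the wire

print(f"max utilization: {utilization:.0%}")                                    # 10%
print(f"1G, 1500-byte MTU: {packets_per_second(UPLINK_BPS, full_size):,.0f} pps")  # 81,274
print(f"1G, 64-byte frames: {packets_per_second(UPLINK_BPS, min_size):,.0f} pps")  # 1,488,095
print(f"10G, 64-byte frames: {packets_per_second(NIC_BPS, min_size):,.0f} pps")    # 14,880,952
```

Even the worst case at 1 Gbit/s (minimum-size frames) is a tenth of the packet rate the same port is built for at 10 Gbit/s, which is the sense in which there should be headroom.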

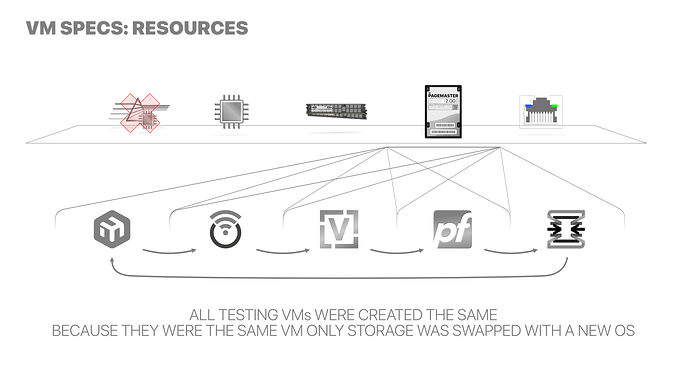

Since I’ve pinpointed how the problem manifests (duckduckgo.com, among other sites, won’t load), that’s what I tested on five different firewalls. Same everything, so none of them would have an advantage over the others.

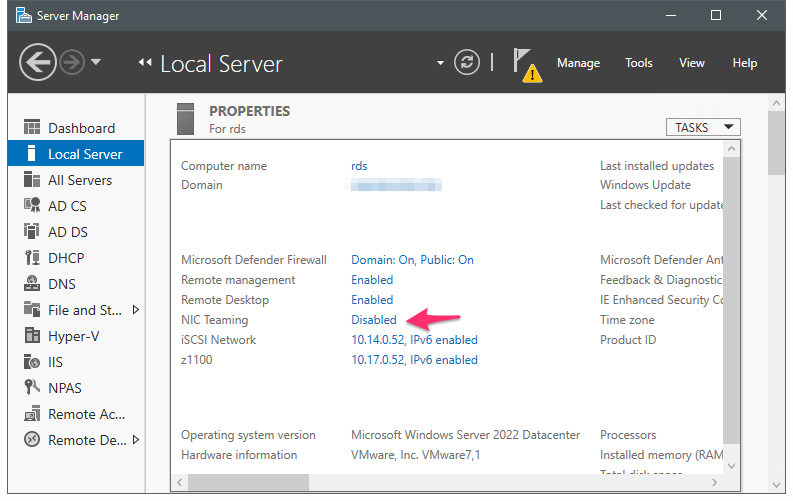

Using the same VM, I’d only swap out the disk.

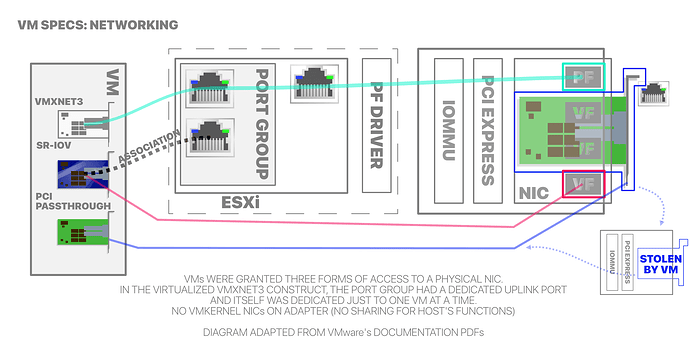

Access to the physical NIC was exclusive to the VM. In virtualized form, the NIC was put in its own virtual switch with a single trunk port group connecting only that one VM. The other methods I used to attach the adapter were SR-IOV and PCI passthrough.

Topology

Directly Physically Attached Network, Interfaces

```
                eth0
                  │  ┌──────┐
              br0 ┼──┤ VyOS ├──┬ eth1
          vif190 ┬┘  └──────┘  └┬ vif881
(of:/24) 1xIPv4 ─┘              └┬ pppoe0
                                 └─ 1xIPv4 (of:/32)
```

Directly Attached Networks, Logical

```
                    ┌──────┐
                    │      ├ ─ 1x 6in4 Hurricane Electric IPv6/64(128) transit
                    │ VyOS │     (L3tun for routed IPv6/48 block)
                    │      ├ ─ 1x OSPF-ECMP link: 2xIPv4/31 /L3tuns
1x IPv4/24 subnet ──┤      ├── 1x PPP IPv4/32, IPv6 in PPP disabled
1x IPv6/120 subnet  └──────┘

┌───────────────────────────────┐
│ No hosts (other than routers) │
│ can be directly reached by    │
│ the router.                   │
└───────────────────────────────┘
```

or, as config (not JSON, as I first thought, but VyOS’s own curly-brace syntax, as printed by show configuration):

```
bridge br0 {
    enable-vlan
    member {
        interface eth0 {
            allowed-vlan 1-4094
            native-vlan 1
        }
    }
    vif 9 {
        address 2001:470:8085:900::1/120
    }
    vif 190 {
        address 10.190.0.1/24
        description zbe00
    }
}
ethernet eth0 {
    hw-id 00:50:56:be:00:01
    offload {
        gro
        gso
        sg
        tso
    }
}
ethernet eth1 {
    hw-id 00:50:56:bf:ee:01
    offload {
        gro
        gso
        sg
        tso
    }
    # C vif 191 {
    # U     address dhcp
    # R     dhcp-options {
    # R         client-id routelogic
    # E         host-name routelogic
    # N     }
    # T }
    vif 881 {
    }
}
pppoe pppoe0 {
    authentication {
        password "somepassword"
        username "tmx34577355743@prodigyorsomething"
    }
    no-peer-dns
    source-interface "eth1.881"
}
loopback lo {
}
tunnel tun0 {
    address 2001:db8:005:ab1::2/64
    description wzHE
    encapsulation sit
    remote 72.52.104.74
    source-address 192.168.191.2
}
wireguard wg1 {
    address 192.168.91.1/31
    peer cloudfront1 {
        address X.X.X.X
        allowed-ips 0.0.0.0/0
        persistent-keepalive 12
        port 4491
        public-key XXX
    }
    private-key XXX
}
wireguard wg2 {
    address 192.168.92.1/31
    peer cloudfront2 {
        address X.X.X.X
        allowed-ips 0.0.0.0/0
        persistent-keepalive 8
        port 4492
        public-key XXX
    }
    private-key XXX
}
```

20240706S@≈NOON

I forgot to send the draft I had written, got sidetracked all night making diagrams. I’m starting testing. Check back soon.

20240707U@≈EVENING

Forgot to post the thing again. I’m seeing zero improvement. I just thought of one last thing to try, something I tried elsewhere for other reasons, but I’m too sleep-deprived.

I’ll just [loosely] timestamp this like a log of someone lost at sea — VyOS does sound like something maritime — and send it all at once when I’m done. I’m starting to grow a beard so it’s fitting.


1 = 2 …and 3, and 4, and 5 (Results)

The only differences between platforms were:

- MikroTik’s CHR can only use IDE disk controllers and boots BIOS.

- OpenWRT is deployed using a temporary VM and can boot either BIOS or EFI. Like VyOS, it fully supports VMware’s paravirtual SCSI controller, which is why its VM would become VyOS next.

- VyOS’s installer can overwrite the disk, and the VM needs zero changes coming from OpenWRT.

- The BSDs, at the forefront of ZFS development, have so. many. disk. related. issues. it’s not funny. No wonder they had to adopt such a resilient storage format. I knocked the SCSI controller down to an old model and they were back in business. Switching between the two is like switching between OpenWRT and VyOS: no changes are needed on the VM at all.

All changes related to storage. None to networking. So this seems largely inconsequential/irrelevant but I’m just being thorough.

Overall, they all yielded the same results they do under standard conditions.

That said, none of the other platforms tested has this traffic-dropping problem, under either standard or these controlled-ish conditions, but whatever problems of their own they have remained. I took that as a sign of consistency and, more or less, as validation of the tests, as unscientific as this all may be.

Examples/Observations

The senses, OPNsense and pfSense, can route the full 1 Gbit/s uplink if something else handles NAT before traffic reaches them, and as long as their ruleset is limited to a single catch-all rule or something just as complicated (or lack thereof).

This information was key to fix the problem.

The minute NAT or a standard interface-based (rather than host-based) ruleset is added, performance tanks, down to about half of the total bandwidth. It can be tuned and tweaked obsessively, and the VM could be made as big as a SharePoint farm, but the improvements are barely noticeable.

Considering that:

- CPU doesn’t spike as reported by the guest OS.

- CPU doesn’t spike as reported by the hypervisor.

- These machines were set up to run in RAM with no logging, to eliminate storage as the bottleneck.

- I migrated the VM to a host with a much more powerful CPU, keeping the same ridiculously large number of cores and amount of memory.

I got the same results each time, so I thought it was a hardware issue: if it were a load problem, it should have gone away under the most favorable conditions these OSes have ever had, with only a couple of interfaces plus some basic 1-to-1 tunnels instead of more than 20 interfaces.

On the other hand, if this were a competition, the Linux-based routers would have completely obliterated the senses. They all exceeded 1 Gbit/s. I have no idea how, because the interface on the ONT is supposed to be 1G max. Maybe it’s overhead calculations, or the bursty nature of it, since it was only by about 0.1 Gbit/s and only briefly before settling at 1G, IDK. What I do know is that this suggests the processor and networking hardware can handle a single gigabit per second of data transfer, no surprise there.
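Whatever caused the brief readings above 1G, protocol overhead pushes the number the other way: it keeps TCP goodput below the 1 Gbit/s line rate rather than above it. A rough sketch, assuming full-size 1500-byte frames, IPv4, and TCP headers without options (real stacks usually add 12 bytes of timestamps, which lowers the ceiling a bit more); the PPPoE-over-VLAN case mirrors the eth1.881/pppoe0 setup in the config:

```python
# Rough TCP goodput ceiling on a 1 Gbit/s Ethernet link (IPv4, TCP without options).
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + header + FCS + inter-frame gap
VLAN_TAG = 4                         # 802.1Q tag, e.g. traffic riding VLAN 881
PPPOE_OVERHEAD = 8                   # 6-byte PPPoE header + 2-byte PPP protocol field
IPV4_HEADER = 20
TCP_HEADER = 20

def goodput_bps(link_bps: float, frame_payload: int = 1500,
                vlan: bool = False, pppoe: bool = False) -> float:
    """Best-case TCP payload rate with back-to-back full-size frames."""
    ip_mtu = frame_payload - (PPPOE_OVERHEAD if pppoe else 0)
    mss = ip_mtu - IPV4_HEADER - TCP_HEADER
    on_wire = frame_payload + ETH_WIRE_OVERHEAD + (VLAN_TAG if vlan else 0)
    return link_bps * mss / on_wire

for label, kwargs in [("plain Ethernet", {}),
                      ("PPPoE over VLAN", {"vlan": True, "pppoe": True})]:
    print(f"{label}: {goodput_bps(1e9, **kwargs) / 1e6:.1f} Mbit/s")
```

That works out to roughly 949 Mbit/s on plain Ethernet and roughly 942 Mbit/s for PPPoE over a VLAN, so a reading above 1 Gbit/s is more likely a measurement or burst artifact than an overhead-accounting effect.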

All Linux routers were zippy and frugal in resource-consumption, as usual.

In OpenWRT, I’ve noticed in the past that it would occasionally leak (allow) traffic through the firewall that’s explicitly set to be rejected. This happened during testing too… eventually. It normally takes a long time to manifest, but I got “lucky” this time.

And then there’s VyOS. What it does, or rather what it doesn’t do, is load sites. But it’s specific to only some sites; others load fine.

duckduckgo.com in particular got my attention. I know it has some kind of relationship with Microsoft, which I block at the ASN level and in DNS, so I didn’t think much of it at first, until I snapped out of it and remembered that the ASN-based layer 3 filter isn’t set up on VyOS; only the DNS filter is available there. I checked my resolvers for a block on duckduckgo.com, but there wasn’t one.

As ignorant as I am with Wireshark, I took a capture, and at the same time started another one from Little Snitch. I managed to trim the Wireshark one down to the relevant packets. Little Snitch takes its data from the Berkeley Packet Filter, which, as I understand it, grabs the data very early, in the upper layers, before it gets a network address, so the addresses in it are all fake, and it was a little too much to clean up. If you’re in the mood, though: the destination address for duckduckgo.com is the one thing that’s correct; it’s the only one starting with fifty-something. The other capture is only about 10 packets. Here are both:

Little Snitch: https://fetch.vitanetworks.link/static/embed/vyos/firefox-little-snitch.pcap

Wireshark: https://fetch.vitanetworks.link/static/embed/vyos/noise-trimmed.pcapng
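For anyone who wants to dig into the Little Snitch capture without Wireshark, a minimal stdlib-only sketch that tallies IPv4 destination addresses in a classic .pcap file and picks out the “fifty-something” ones. It assumes an Ethernet or raw-IP link type, which hasn’t been checked against that particular file, and it does not read pcapng; the helper names are made up for illustration:

```python
import ipaddress
import struct
from collections import Counter

LINKTYPE_ETHERNET = 1
LINKTYPE_RAW = 101  # packets start directly with the IP header

def ipv4_destinations(data: bytes) -> Counter:
    """Count IPv4 destination addresses in a classic (libpcap, not pcapng) capture."""
    magic = data[:4]
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):    # little-endian, us / ns
        endian = "<"
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):  # big-endian, us / ns
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng needs a different parser)")
    (linktype,) = struct.unpack(endian + "I", data[20:24])
    counts: Counter = Counter()
    offset = 24  # size of the global header
    while offset + 16 <= len(data):
        _sec, _frac, incl_len, _orig_len = struct.unpack(endian + "IIII", data[offset:offset + 16])
        offset += 16
        pkt = data[offset:offset + incl_len]
        offset += incl_len
        if linktype == LINKTYPE_ETHERNET:
            if len(pkt) < 14:
                continue
            ethertype = struct.unpack("!H", pkt[12:14])[0]
            ip = pkt[14:]
            if ethertype == 0x8100 and len(pkt) >= 18:  # one 802.1Q VLAN tag
                ethertype = struct.unpack("!H", pkt[16:18])[0]
                ip = pkt[18:]
            if ethertype != 0x0800:  # not IPv4
                continue
        elif linktype == LINKTYPE_RAW:
            ip = pkt
        else:
            raise ValueError(f"unhandled link type {linktype}")
        if len(ip) >= 20 and ip[0] >> 4 == 4:
            counts[str(ipaddress.IPv4Address(ip[16:20]))] += 1
    return counts

def fifty_something(counts: Counter) -> list:
    """Destinations whose first octet is 50-59, most frequent first."""
    return [(addr, n) for addr, n in counts.most_common()
            if 50 <= int(addr.split(".")[0]) <= 59]
```

Usage would be along the lines of fifty_something(ipv4_destinations(open("firefox-little-snitch.pcap", "rb").read())). The Wireshark file is pcapng; editcap -F pcap can convert it to classic pcap first.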

interfaces ethernet detail

{6.}

Taken while the PPPoE interface still existed and the problem was occurring.

```
vyos@vyos# run show interfaces ethernet detail
eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br0 state UP group default qlen 1000
    link/ether 00:50:56:be:00:01 brd ff:ff:ff:ff:ff:ff
    altname enp11s0
    altname ens192
    RX:      bytes  packets  errors  dropped  overrun       mcast
        1510980452  3153534       0      455        0      476201
    TX:      bytes  packets  errors  dropped  carrier  collisions
        1236465533   947764       0        0        0           0

eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:50:56:bf:ee:01 brd ff:ff:ff:ff:ff:ff
    altname enp19s0
    altname ens224
    inet6 fe80::250:56ff:febf:ee01/64 scope link
       valid_lft forever preferred_lft forever
    RX:      bytes  packets  errors  dropped  overrun       mcast
        1260670287  1051369       0      302        0         985
    TX:      bytes  packets  errors  dropped  carrier  collisions
          85748296   737956       0        0        0           0

eth1.881@eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:50:56:bf:ee:01 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::250:56ff:febf:ee01/64 scope link
       valid_lft forever preferred_lft forever
    RX:      bytes  packets  errors  dropped  overrun       mcast
        1242138952   999217       0        0        0          55
    TX:      bytes  packets  errors  dropped  carrier  collisions
          85747470   737949       0        0        0           0
```
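To put the dropped counters in proportion, a tiny sketch with the RX numbers copied from the output above. One caveat, based on how the Linux kernel counts rather than on anything measured here: the RX dropped counter also includes frames the stack had no handler for (an unknown protocol, for instance), so a small nonzero value isn’t necessarily a sign of the NIC running out of buffers.

```python
# RX counters copied from the "show interfaces ethernet detail" output above.
rx = {
    "eth0":     {"packets": 3153534, "dropped": 455},
    "eth1":     {"packets": 1051369, "dropped": 302},
    "eth1.881": {"packets": 999217,  "dropped": 0},
}

def drop_ratio(packets: int, dropped: int) -> float:
    """Fraction of received packets the kernel counted as dropped."""
    return dropped / packets if packets else 0.0

for name, c in rx.items():
    print(f"{name:9} {drop_ratio(c['packets'], c['dropped']):.4%}")
```

Both nonzero ratios stay under 0.03%, and the VLAN sub-interface carrying the PPPoE session shows none at all.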

MAC addresses do not correspond to anything physical…or virtual. vCenter, where the range is from, hasn’t been booted up in a while, but they’re already mapped in DHCP, so…yeah.
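Both hw-ids sit in VMware’s 00:50:56 OUI. A small sketch that places a MAC within that OUI, assuming VMware’s published allocation: 00:50:56:00:00:00 to 00:50:56:3F:FF:FF for manually assigned static MACs, and 00:50:56:80:00:00 to 00:50:56:BF:FF:FF for addresses vCenter generates. Treat those ranges as an assumption to check against VMware’s documentation; the function name is hypothetical.

```python
VMWARE_OUI = (0x00, 0x50, 0x56)

def classify_vmware_mac(mac: str) -> str:
    """Place a MAC within VMware's 00:50:56 OUI, per the ranges assumed above."""
    octets = [int(part, 16) for part in mac.split(":")]
    if len(octets) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    if tuple(octets[:3]) != VMWARE_OUI:
        return "outside 00:50:56"
    fourth = octets[3]
    if fourth <= 0x3F:
        return "manual static range (00:50:56:00-3F)"
    if 0x80 <= fourth <= 0xBF:
        return "vCenter-generated range (00:50:56:80-BF)"
    return "other 00:50:56 range"

for mac in ("00:50:56:be:00:01", "00:50:56:bf:ee:01"):
    print(mac, "->", classify_vmware_mac(mac))
```

Both addresses land in the vCenter-generated block, which fits the range having come from vCenter.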

20240708M@≈DAWN

Found more non-working domains: help.apple.com, hub.libreoffice.org, and there’s another, but I might get banned or at least reprimanded if I mention it; I got a few words censored already. =P

20240708M@≈AFTERNOON

OMFG, it worked! Pursuing the hunch I had paid off. I’ve been wanting to switch to VyOS for around 3 years. At the time of writing this sentence, the document is nine pages long, though. I’m done testing, but this might take another day.

I’m also just realizing the VyOS image I’m running is the same one I had at the original post, 6 months old.

```
# run show system image
Name                      Default boot    Running
------------------------  --------------  ---------
1.5-rolling-202401030023  Yes             Yes

$ show system image
Name                      Default boot    Running
------------------------  --------------  ---------
1.5-rolling-202407090020  Yes             Yes
1.5-rolling-202401030023
```

Ironically, what started all this, a UniFi gateway, is the only place where I got better results, and in the one place where the hardware can’t be improved. Thanks to VyOS, I unexpectedly learned to configure UniFi gateways from scratch without their Controller nonsense, and when I realized this, I went and did just that.

This UniFi gateway is the first, cheapest, and now discontinued model, rocking half a gig of RAM and two 500 MHz processor cores. With my newfound expertise, I got it routing and NATing at full line speed, no longer dropping traffic, and doing dynamic routing, which to date UI (formerly Ubiquiti Networks) doesn’t support even on its almightiest models.

But somehow, using their controlled-experience Controller, I can’t get those results or half the functionality, which I was only able to unlock because of VyOS. If the software weren’t so outdated, I’d very much stick with it.

20240708M@≈EVENING

Major effup in the update. This is never going to end.

20240710W@NOON

On page 13. Outlook on the Web, AKA OWA, AKA Exchange’s webmail, is now available from outside the network, complete with the back and forth needed to log in through ADFS and pass through all the proxies, several layers deep in the infrastructure. It’s basically a success. My brain, not so much; it’s a bit scattered, but I want to finish already, so here’s what I found:

Back on OpenWRT, one of its quirks is that it sees itself on the network and prints that to the console over and over. I could use macvlans to work around it, but I’m already doing a switch, then sub-interfaces, this and that, because of PPPoE. What if I moved it, though?

…NAT it elsewhere and pass it down to OpenWRT…

I knew it had to be a Linux-based router; they’re the lightest and fastest. OpenWRT is out of the question because it would need two interfaces and the problem would repeat itself. MikroTik’s CHR is good, but I have only one license and it’s already busy. It would have to do more stuff to justify it, and its current task is way too important to abandon. VyOS mysteriously drops traffic.

What about UniFi? I thought, quickly followed by remembering that it doesn’t do full-cone NAT. That would be an issue. Just as fast, I also remembered that where I need full-cone NAT is on my static addresses, which are routed in through a tunnel that’s already full-cone NATed and can bypass the UniFi gateway. And so I solved the issue. When I started these tests this week, the UniFi gateway still had that config.
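For context on why full-cone NAT matters here: with full-cone NAT (in RFC 4787 terms, endpoint-independent mapping plus endpoint-independent filtering), once an inside host has sent something out, any outside host can reach it through the same public address and port; a port-restricted cone NAT keeps the same mapping but only accepts packets from endpoints the inside host already contacted. A toy model of just that difference; the class and all addresses are hypothetical, and it says nothing about how UniFi or VyOS implement NAT internally:

```python
from dataclasses import dataclass, field

Endpoint = tuple[str, int]  # (IP address, port)

@dataclass
class ToyNat:
    """Hypothetical NAT model: endpoint-independent mapping, configurable filtering."""
    public_ip: str
    full_cone: bool              # True: endpoint-independent filtering ("full cone")
    next_port: int = 40000
    mappings: dict = field(default_factory=dict)   # inside endpoint -> public port
    contacted: dict = field(default_factory=dict)  # public port -> remote endpoints seen

    def outbound(self, inside: Endpoint, remote: Endpoint) -> Endpoint:
        port = self.mappings.get(inside)
        if port is None:  # one mapping per inside endpoint, whatever the destination
            port = self.next_port
            self.next_port += 1
            self.mappings[inside] = port
            self.contacted[port] = set()
        self.contacted[port].add(remote)
        return (self.public_ip, port)

    def inbound_allowed(self, remote: Endpoint, public_port: int) -> bool:
        if public_port not in self.contacted:
            return False  # no mapping exists, nothing to forward to
        if self.full_cone:
            return True   # any outside host may reach the mapped inside endpoint
        # Port-restricted cone: only endpoints the inside host already talked to.
        return remote in self.contacted[public_port]

# A host behind the NAT talks to one server, then a different host tries to reach it.
for full_cone in (True, False):
    nat = ToyNat("203.0.113.1", full_cone=full_cone)
    _, port = nat.outbound(("10.190.0.10", 5000), ("198.51.100.7", 3478))
    stranger = ("192.0.2.99", 6000)
    print("full cone" if full_cone else "port-restricted", nat.inbound_allowed(stranger, port))
```

The full-cone instance lets the unrelated host in and the port-restricted one does not, which is the behavior that matters for inbound traffic to the static addresses.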

I reconfigured VyOS with static addressing on the WAN, gateway and all that, went to duckduckgo.com and it loaded the site. Finally!

There’s dynamic routing on the network; what if I’d missed something?

I double-checked, all good. I reverted the configuration to make sure I wasn’t imagining things and to confirm it would fail again and then succeed again. It did, and it did.

So there it is.

NAT was the culprit, though now that I think about it, it could also be PPPoE; those are the two things I abstracted away from VyOS.
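If PPPoE rather than NAT turns out to be the part that mattered, one PPPoE-specific suspect that commonly produces a “some sites load, others don’t” pattern is MTU/MSS: the PPPoE and PPP headers take 8 bytes out of the 1500-byte Ethernet payload, and if oversized TCP segments are dropped without the ICMP “fragmentation needed” message making it back, large responses stall while small ones get through. Nothing in this thread confirms that; it’s a hypothesis worth ruling out. The arithmetic as a sketch, using standard header sizes; the 6in4 lines apply to the sit tunnel in the config only if it ends up riding the PPPoE link:

```python
ETH_PAYLOAD = 1500  # standard Ethernet MTU
PPPOE_HEADER = 6    # PPPoE session header
PPP_PROTOCOL = 2    # PPP protocol field
IPV4_HEADER = 20
IPV6_HEADER = 40
TCP_HEADER = 20     # without options

def pppoe_mtu(eth_payload: int = ETH_PAYLOAD) -> int:
    """Largest IP packet that fits in one PPPoE frame."""
    return eth_payload - PPPOE_HEADER - PPP_PROTOCOL

def tcp_mss(ip_mtu: int, ip_header: int) -> int:
    """Largest TCP payload that fits in one unfragmented packet."""
    return ip_mtu - ip_header - TCP_HEADER

def sit_mtu(outer_mtu: int) -> int:
    """6in4 (sit) wraps each IPv6 packet in a 20-byte IPv4 header."""
    return outer_mtu - IPV4_HEADER

wan = pppoe_mtu()
print("PPPoE MTU:", wan)                                         # 1492
print("IPv4 TCP MSS over PPPoE:", tcp_mss(wan, IPV4_HEADER))     # 1452
tun = sit_mtu(wan)
print("6in4 MTU over PPPoE:", tun)                               # 1472
print("IPv6 TCP MSS through 6in4:", tcp_mss(tun, IPV6_HEADER))   # 1412
```

VyOS has interface-level MSS clamping (an adjust-mss option); check the documentation for the rolling release in use for the exact syntax before relying on it.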

I updated the system since then, but I don’t feel like poking the bear right now, I just want to sleep.

it’s basically science.

point is, after science, but also that maybe they’re related in more than the fork between devs that brought them into the world.