Hi everyone,

I’ve started using WireGuard on VyOS and I’m trying to tune it to see how far I can push it on my current box.

It’s a cheap 4-core Intel Celeron N3150 CPU, but I’ve been pretty happy with it: it easily does well over 1 Gbit/s with a few firewall rules, NAT, etc.

Also, for reference, on the WAN interface, I have gro, gso, lro, sg, tso offloads enabled.

The interface is an Intel X520.

Regarding WireGuard, it’s working well and I don’t have many complaints.

The internet connection on the VyOS box where WireGuard is configured is 1000 Mbit/s down, 400 Mbit/s up.

The setup is simple: the client is a phone, which can do 400 Mbit/s down and up on speedtest.net when connected directly to the internet.

When I connect through WireGuard with all traffic routed (0.0.0.0/0), the speedtest drops to around 95 Mbit/s download and 110 Mbit/s upload.

I’ve tested setting the wg01 interface MTU to 1400 and also setting the firewall option “adjust-mss” on the same interface to 1400; no change.
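For reference, here is the arithmetic behind MTU and MSS values as I understand it. This is only a rough sketch, not anything from VyOS or WireGuard itself: the function names are made up, and it assumes the commonly cited WireGuard data-packet overhead (outer IP header + 8-byte UDP header + 32 bytes of WireGuard framing) and TCP/IP headers without options.

```python
# Hypothetical helpers for illustration only; not part of VyOS or WireGuard.
IP_HEADER = {4: 20, 6: 40}  # bytes, assuming no IPv4 options / IPv6 extension headers
UDP_HEADER = 8              # bytes
WG_OVERHEAD = 32            # bytes: 16-byte data-message header + 16-byte auth tag
TCP_HEADER = 20             # bytes, assuming no TCP options


def tunnel_mtu(link_mtu: int, outer_ip_version: int = 4) -> int:
    """Largest inner packet that fits in one outer packet of size link_mtu."""
    return link_mtu - IP_HEADER[outer_ip_version] - UDP_HEADER - WG_OVERHEAD


def tcp_mss(mtu: int, inner_ip_version: int = 4) -> int:
    """Largest TCP payload that fits in one inner packet of size mtu."""
    return mtu - IP_HEADER[inner_ip_version] - TCP_HEADER


if __name__ == "__main__":
    print(tunnel_mtu(1500, 4))  # 1440 (WireGuard carried over IPv4)
    print(tunnel_mtu(1500, 6))  # 1420 (WireGuard carried over IPv6)
    print(tcp_mss(1400, 4))     # 1360 (IPv4 TCP inside a 1400-byte tunnel MTU)
```

If that arithmetic is right, the MSS matching a 1400-byte tunnel MTU would be 1360 for IPv4 rather than 1400, though I doubt that alone explains a drop this large.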

With htop, I was able to see that it’s hitting a single core a lot harder than the others.

I have 1 core maxed out, while the other 3 cores are at around 55% usage.

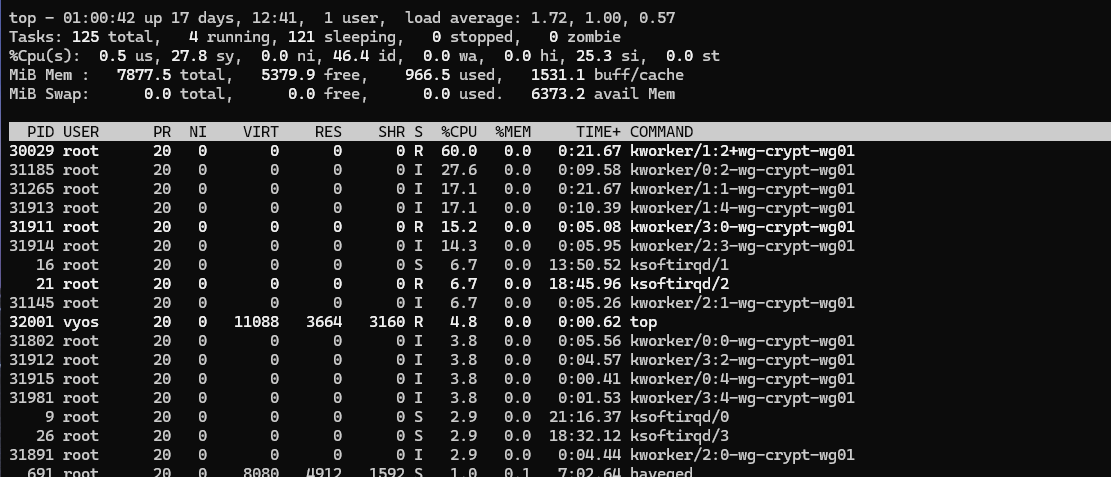

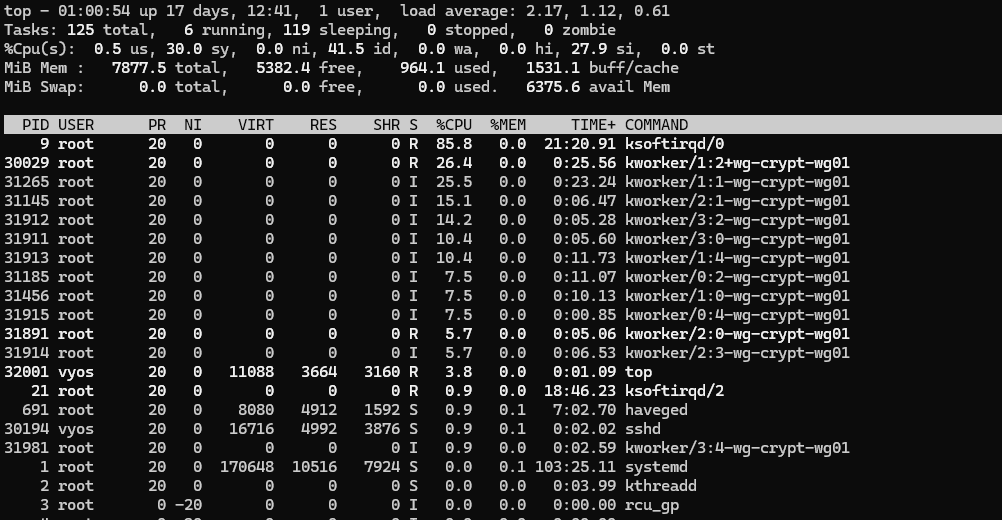

I checked further with plain top, as htop was not showing all processes for some reason.

During the download phase of the speedtest on the phone, the majority of CPU usage is taken by the “wg-crypt-wg01” kworkers.

During the upload phase of the speedtest on the phone, the limiting factor seems to be interrupts, as the main process is ksoftirqd/0.
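To put numbers on the per-core imbalance instead of eyeballing top, something like the sketch below could be used. It assumes the usual Linux `/proc/softirqs` layout (a header row of CPU names, then one `NAME: count count ...` row per softirq type); the function names are my own, and I have only reasoned through it, not run it on the router.

```python
import time
from typing import Dict, List


def parse_softirqs(text: str) -> Dict[str, List[int]]:
    """Parse /proc/softirqs-style text into {softirq_name: [count per CPU]}."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    n_cpus = len(lines[0].split())  # header row: CPU0 CPU1 ...
    table: Dict[str, List[int]] = {}
    for ln in lines[1:]:
        name, _, rest = ln.partition(":")
        table[name.strip()] = [int(x) for x in rest.split()[:n_cpus]]
    return table


def delta_share(before: Dict[str, List[int]],
                after: Dict[str, List[int]],
                kind: str = "NET_RX") -> List[float]:
    """Percentage of new `kind` softirqs handled by each CPU between two samples."""
    diffs = [a - b for b, a in zip(before[kind], after[kind])]
    total = sum(diffs) or 1  # avoid division by zero on an idle box
    return [round(100 * d / total, 1) for d in diffs]


def sample_net_rx_share(interval: float = 5.0,
                        path: str = "/proc/softirqs") -> List[float]:
    """Take two samples `interval` seconds apart and return the per-CPU NET_RX share."""
    with open(path) as f:
        before = parse_softirqs(f.read())
    time.sleep(interval)
    with open(path) as f:
        after = parse_softirqs(f.read())
    return delta_share(before, after)
```

Calling `sample_net_rx_share()` on the router while the speedtest runs should return one percentage per core; a result close to 100% on the first core would confirm that receive processing is landing almost entirely on CPU0.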

I’m looking for any ideas or guidance on how I could optimize this a bit and get a better spread of CPU usage, especially for the upload phase with interrupts.

Thanks

UPDATE 1:

It seems that the high interrupt load on a single core is not just an issue with WireGuard.

Doing a speedtest from my workstation (which is behind the VyOS router), during the download phase I get a single core maxed out by interrupts and the other 3 at around 20%.

During the upload phase, there is barely any CPU usage.