@Viacheslav Do you have any options or suggestions in mind that I can try? I can confirm that crypto operations for IPsec work efficiently.

@Viacheslav Also, MACsec configured manually on VyOS 1.2.5 gives a throughput of around 1.5 Gbps.

Thanks for all this work in finding what part caused the performance degradation.

I spent some hours finding out why this happened in the code. You can read more here: T3619 Performance Degradation 1.2 --> 1.3 | High ksoftirqd CPU usage

Hi all!

I have the same problem. I’m running VyOS 1.3 epa1 in a KVM VM on a very low-power host (PC Engines apu4d4).

At about 60 Mbit/s I have 100% ksoftirqd load in the VM on only one core, and it bottlenecks there. pfSense/OPNsense had no problems with 80 Mbit/s (my connection speed) and showed no significant CPU load.

I know it’s a low-power board, so I spun up a Debian VM and tested with iperf3. Inside the Debian VM I can reach almost gigabit speed (~920 Mbit/s), so my 80 Mbit/s Internet connection shouldn’t be an issue.

I tried the tricks and workarounds in this thread, but nothing helped.

Running on KVM with virtio network enabled.

Any hint where I can look for the problem?

Many Thanks!

Are you sure it’s the SAME problem?

Have you tried a VyOS 1.2.x image and confirmed that it doesn’t show the issue?

Did you set up any kind of routing when you tried it in a Debian VM? Just sending network traffic will barely use any CPU; it’s as soon as you start routing traffic that it gets heavy.

No, I have not yet tested whether 1.2 has the same issue; it was a fresh 1.3 install.

No, I did not set up any kind of routing in the Debian VM. I thought the softirq load was coming from the interface, therefore I just hit it with iperf.

OPNsense was perfectly fine routing that kind of traffic on this machine. I thought VyOS should have no issues since it’s running Linux instead of BSD.

Also, when I run a speed test, only 1 out of 4 cores is used. Is this normal?

Thanks!

Hi!

I’ve done a bit more testing and set multiqueue on my KVM hypervisor to the number of cores I have (4).

Checking with cat /proc/interrupts, every virtio interface now has 4 input/output interrupts, so that is working correctly.

It improved the situation a little, but not a lot. I still have one core at 100% ksoftirqd, but checking /proc/interrupts I can see that the interface interrupts are distributed across all four cores equally. So there has to be something else that is utilizing just one core.
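To compare per-core totals instead of eyeballing the table, something like the sketch below sums the per-CPU counts in /proc/interrupts for every IRQ whose name matches a pattern. The function name irq_totals and the example pattern are my own choices for illustration, and it assumes the usual layout where the first row is the CPU header and the IRQ name is the last field.

```shell
# Sum per-CPU interrupt counts for every IRQ whose name matches a pattern.
# Usage: irq_totals PATTERN [FILE]   (FILE defaults to /proc/interrupts)
# irq_totals is a hypothetical helper name, not part of VyOS.
irq_totals() {
    awk -v pat="$1" '
        NR == 1 { ncpu = NF; next }      # header row: CPU0 CPU1 ...
        $NF ~ pat {                      # assumption: IRQ name is the last field
            for (i = 1; i <= ncpu; i++) sum[i] += $(i + 1)
        }
        END {
            for (i = 1; i <= ncpu; i++) printf "CPU%d %d\n", i - 1, sum[i]
        }
    ' "${2:-/proc/interrupts}"
}
```

For example, irq_totals 'virtio2-input' prints one line per CPU with the summed receive interrupts of that device. A roughly even spread suggests the hardware interrupts are balanced and the single busy core has another cause.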

Does anyone have an idea what I can check next? Or are there services on VyOS that are known to be single-threaded?

Many thanks!

When you had pfSense/OPNsense, were they running under the hypervisor or directly on bare metal?

I’m asking because I wonder whether it’s worth turning offloading on/off on the hypervisor interfaces themselves, not on the VM interfaces.

Is your Internet connection PPPoE or just direct IP?

OPNsense was also running under the hypervisor.

Internet connection is PPPoE.

Turning offloading on/off on the hypervisor should only change the IRQ load on the hypervisor itself and not in the VM, right? Because I can see the softirq load only in the VyOS VM.

Any chance to find out what exactly is causing this single-threaded IRQ load?
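One place to look is /proc/softirqs, which keeps per-CPU counters for each softirq type, including NET_RX. A minimal sketch, assuming the standard layout of that file (net_rx_per_cpu is a hypothetical helper name):

```shell
# Print the NET_RX softirq counter for each CPU.
# Usage: net_rx_per_cpu [FILE]   (FILE defaults to /proc/softirqs)
# net_rx_per_cpu is a hypothetical helper name used for illustration.
net_rx_per_cpu() {
    awk '$1 == "NET_RX:" {
        # fields 2..NF are the counters for CPU0, CPU1, ...
        for (i = 2; i <= NF; i++) printf "CPU%d %s\n", i - 2, $i
    }' "${1:-/proc/softirqs}"
}
```

Running it twice a few seconds apart during a speed test and comparing the numbers shows which CPU’s NET_RX counter grows fastest.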

Hello @erfus, can you confirm that you have enabled RPS on the WAN interface?

set interfaces ethernet eth0 offload rps

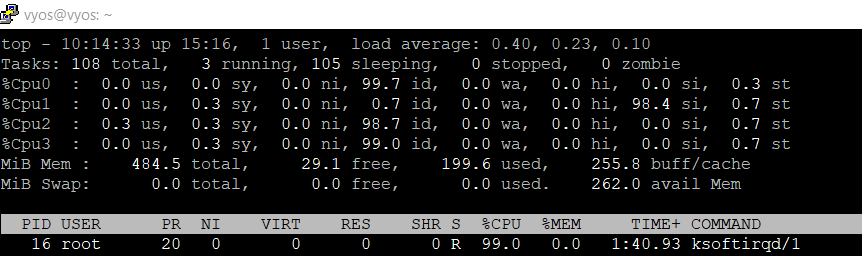

Also, please provide a screenshot of top (press 1 to show per-CPU usage) taken while you are running a speed test.

Hi @Dmitry

Thanks!

On eth0 I’ve enabled offload rps, gro, gso, sg, tso. It’s running on Proxmox in KVM.

See the attached screenshot of top.

Thanks!

@erfus please share:

sudo cat /proc/interrupts

sudo cat /sys/class/net/eth0/queues/rx-0/rps_cpus

sudo cat /sys/class/net/eth0/queues/rx-1/rps_cpus

sudo cat /sys/class/net/eth0/queues/rx-2/rps_cpus

sudo cat /sys/class/net/eth0/queues/rx-3/rps_cpus

Please try running the following command manually and run the speed test again:

echo "f" > /sys/class/net/eth0/queues/rx-1/rps_cpus

Also, please provide a screenshot of top (after pressing 1) again.

@Dmitry thanks!

    vyos@vyos:~$ sudo cat /proc/interrupts
               CPU0       CPU1       CPU2       CPU3
      0:         10          0          0          0   IO-APIC  2-edge      timer
      1:          0          0          0          9   IO-APIC  1-edge      i8042
      8:          1          0          0          0   IO-APIC  8-edge      rtc0
      9:          0          0          0          0   IO-APIC  9-fasteoi   acpi
     10:          0          0      31012          0   IO-APIC  10-fasteoi  virtio0
     11:          0          0          0          0   IO-APIC  11-fasteoi  uhci_hcd:usb1
     12:          0          0          5          0   IO-APIC  12-edge     i8042
     14:          0          0          0          0   IO-APIC  14-edge     ata_piix
     15:          0          0      60990          0   IO-APIC  15-edge     ata_piix
     24:          0          0          0          0   PCI-MSI  81920-edge  virtio1-config
     25:          0          0          0          0   PCI-MSI  81921-edge  virtio1-control
     26:          0          0          0          0   PCI-MSI  81922-edge  virtio1-event
     27:       1220          0          0          0   PCI-MSI  81923-edge  virtio1-request
     28:          0       1247          0          0   PCI-MSI  81924-edge  virtio1-request
     29:          0          0       1576          0   PCI-MSI  81925-edge  virtio1-request
     30:          0          0          0       1106   PCI-MSI  81926-edge  virtio1-request
     31:          0          0          0          0   PCI-MSI  294912-edge virtio2-config
     32:      16554          0          0          0   PCI-MSI  294913-edge virtio2-input.0
     33:         55          1          0          0   PCI-MSI  294914-edge virtio2-output.0
     34:          0     554826          1          0   PCI-MSI  294915-edge virtio2-input.1
     35:          0     307946          0          2   PCI-MSI  294916-edge virtio2-output.1
     36:          1          0     595955          0   PCI-MSI  294917-edge virtio2-input.2
     37:          0          1     520220          0   PCI-MSI  294918-edge virtio2-output.2
     38:          0          0          1     436081   PCI-MSI  294919-edge virtio2-input.3
     39:          0          0          0     244459   PCI-MSI  294920-edge virtio2-output.3
     40:          0          0          0          0   PCI-MSI  311296-edge virtio3-config
     41:      26601          1          0          0   PCI-MSI  311297-edge virtio3-input.0
     42:        222          0          1          0   PCI-MSI  311298-edge virtio3-output.0
     43:          0     288878          0          1   PCI-MSI  311299-edge virtio3-input.1
     44:          1    1350720          0          0   PCI-MSI  311300-edge virtio3-output.1
     45:          0          1     653067          0   PCI-MSI  311301-edge virtio3-input.2
     46:          0          0    1132279          0   PCI-MSI  311302-edge virtio3-output.2
     47:          0          0          0     266870   PCI-MSI  311303-edge virtio3-input.3
     48:          1          0          0     859129   PCI-MSI  311304-edge virtio3-output.3
     49:          0          0          0          0   PCI-MSI  327680-edge virtio4-config
     50:       1704          0          1          0   PCI-MSI  327681-edge virtio4-input.0
     51:          5          0          0          1   PCI-MSI  327682-edge virtio4-output.0
     52:          1      17951          0          0   PCI-MSI  327683-edge virtio4-input.1
     53:          0      17851          0          0   PCI-MSI  327684-edge virtio4-output.1
     54:          0          0      21513          0   PCI-MSI  327685-edge virtio4-input.2
     55:          0          0      22369          1   PCI-MSI  327686-edge virtio4-output.2
     56:          1          0          0      17234   PCI-MSI  327687-edge virtio4-input.3
     57:          0          1          0      17176   PCI-MSI  327688-edge virtio4-output.3
    NMI:          0          0          0          0   Non-maskable interrupts
    LOC:     335221    2143172     710934    1955060   Local timer interrupts
    SPU:          0          0          0          0   Spurious interrupts
    PMI:          0          0          0          0   Performance monitoring interrupts
    IWI:          0          0          0          0   IRQ work interrupts
    RTR:          0          0          0          0   APIC ICR read retries
    RES:     208598     113816      89235     114441   Rescheduling interrupts
    CAL:       1412      18461      14562      16236   Function call interrupts
    TLB:       1816       1715       2267       1845   TLB shootdowns
    TRM:          0          0          0          0   Thermal event interrupts
    THR:          0          0          0          0   Threshold APIC interrupts
    DFR:          0          0          0          0   Deferred Error APIC interrupts
    MCE:          0          0          0          0   Machine check exceptions
    MCP:        190        190        190        190   Machine check polls
    ERR:          0
    MIS:          0
    PIN:          0          0          0          0   Posted-interrupt notification event
    NPI:          0          0          0          0   Nested posted-interrupt event
    PIW:          0          0          0          0   Posted-interrupt wakeup event

vyos@vyos:~$ sudo cat /sys/class/net/eth0/queues/rx-0/rps_cpus

e

vyos@vyos:~$ sudo cat /sys/class/net/eth0/queues/rx-1/rps_cpus

0

vyos@vyos:~$ sudo cat /sys/class/net/eth0/queues/rx-2/rps_cpus

0

vyos@vyos:~$ sudo cat /sys/class/net/eth0/queues/rx-3/rps_cpus

0
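For reference, rps_cpus holds a hexadecimal CPU bitmap, and a value of 0 means RPS is disabled for that queue. The helper below is only a sketch for decoding such a mask (rps_mask_to_cpus is a made-up name, and it assumes a single hex group without commas, which is what a 4-core VM shows):

```shell
# Decode a hexadecimal rps_cpus mask into the CPU numbers it enables.
# Usage: rps_mask_to_cpus MASK   e.g. rps_mask_to_cpus e
# rps_mask_to_cpus is a hypothetical helper; it assumes one hex group (no commas).
rps_mask_to_cpus() {
    mask=$((0x$1))
    cpu=0
    cpus=""
    while [ "$mask" -gt 0 ]; do
        # bit N set means CPU N may process packets from this queue
        if [ $((mask & 1)) -eq 1 ]; then
            cpus="${cpus:+$cpus }$cpu"
        fi
        mask=$((mask >> 1))
        cpu=$((cpu + 1))
    done
    echo "${cpus:-none}"
}
```

rps_mask_to_cpus e prints 1 2 3, so in the output above only rx-0 steers packets (to CPUs 1 to 3), while rx-1, rx-2 and rx-3 have RPS switched off.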

The last one gives me a permission denied error:

vyos@vyos:~$ sudo echo "f" > /sys/class/net/eth0/queues/rx-1/rps_cpus

-vbash: /sys/class/net/eth0/queues/rx-1/rps_cpus: Permission denied

Thanks!

I managed to get the last command working by using “sudo su -l”. Don’t know why sudo alone wasn’t enough.
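The reason is that the > redirection is opened by the calling shell, which still runs as the vyos user, before sudo starts; only echo itself runs as root. A common way around it without a root shell is to pipe into sudo tee. A rough sketch that writes the same mask to every receive queue (set_rps_mask, SYSFS_NET and SUDO are hypothetical names added for illustration; the two variables exist only so the function can be tried against a scratch directory):

```shell
# Write an RPS CPU mask to every receive queue of an interface.
# Usage: set_rps_mask IFACE MASK   e.g. set_rps_mask eth0 f
# set_rps_mask, SYSFS_NET and SUDO are hypothetical names, not VyOS features.
set_rps_mask() {
    for queue in "${SYSFS_NET:-/sys/class/net}/$1"/queues/rx-*; do
        [ -d "$queue" ] || continue   # skip if the glob matched nothing
        # tee opens the file itself, so running tee through sudo is enough;
        # the unprivileged shell only sets up the pipe.
        printf '%s\n' "$2" | ${SUDO-sudo} tee "$queue/rps_cpus" > /dev/null
    done
}
```

With the defaults, set_rps_mask eth0 f would write f to rx-0 through rx-3 of eth0. Note that values written this way are not part of the VyOS configuration, so they are unlikely to survive a reboot or a config change on that interface.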

Anyway, there is still IRQ load on only one core.

Just try

sudo su -l

echo "f" > /sys/class/net/eth0/queues/rx-1/rps_cpus

Yes, I’ve set it.

It did not make any change; there is still only one core with softirq load.

I also tried setting “f” for rx[0-3] which did not make a difference.

Also just tried setting “ff” for rx[0-3], again did not make any difference.

So your speed test is running with only one flow.

Try to set:

configure

set system option performance throughput

commit

It looks very strange. Try installing linux-tools for the kernel you are running and provide the perf top output.